Another Tesla “Full Self-Driving” (FSD) crash. Another death. Another round of online social media denials that follow a script as predictable as a Pinto’s design failures.

Take, for example, a Tesla Model Y that struck and killed a motorcyclist in Washington state while operating in FSD mode. Within hours of the news breaking, Tesla’s online community had deployed its standard “no true Scotsman” fallacy army: this wasn’t real FSD, the investigation is biased, and anyone reporting on it is part of a coordinated attack.

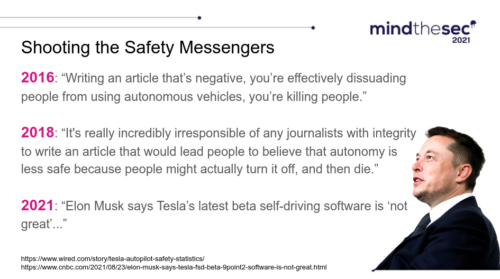

Scripted Donkey Deployments

When an Arizona FSD fatality started getting attention a couple days ago, Reddit’s r/TeslaFSD immediately spun into emergency response:

- This was running 11 which had known issues. We’re on 12 now which completely fixes this problem. Misleading to call this an FSD issue when it’s ancient software.

- Convenient how all these ‘investigations’ happen right before earnings calls. Follow the money – who benefits from Tesla’s stock price dropping?

- The NHTSA has been gunning for Tesla since day one. They never investigate Ford or GM crashes this aggressively. Pure bias.

The pattern of information warfare is consistent across every Tesla FSD fatality: minimize the death, question the timing, attack the investigators. What’s missing is any acknowledgment that experimental software killed someone.

Investigations Branded “Conspiracies”

The National Highway Traffic Safety Administration (NHTSA) didn’t investigate Tesla from 2016 to 2020 because of political corruption.

Then, after Trump no longer could press his thumb on regulators, NHTSA opened an investigation into Tesla’s FSD technology and started documenting multiple crashes involving the system. This is standard procedure – when a particular technology shows up repeatedly in fatal crashes, safety regulators investigate.

Tesla’s online defenders see coordination where none exists:

- Weird how the Washington Post, Reuters, and CNN all covered this crash within 24 hours. Almost like they’re all reading from the same script 🤔

- Amazing how NHTSA always finds ‘new evidence’ right when Tesla stock is doing well. These people are compromised.

The fact that multiple commenters are saying “it’s coordinated” while following an identical, predictable response pattern is pure irony. They’re the ones who are coordinated – coordinated in their denial.

Their response also treats routine journalism as unusual or suspicious. When a Tesla crashes and kills someone, that’s news. When multiple outlets report on it, that’s how news works. The alternative would be a media blackout on Tesla fatalities, which is apparently what Tesla would prefer. If you report, you violate their implied censorship. It’s kind of like how lynchings worked in 1830s “cancel culture” America.

The Dumb Theseus Game

Perhaps the most cynical aspect of the Tesla fan response is “version” gaming: dismissing every crash as irrelevant because the software is updated constantly. This is basically the ship of Theseus, but weaponized. Every nail pounded into a plank means the entire boat is different and thus can’t be compared anymore to any other boat.

- Literally nobody is running v11 anymore. This crash tells us nothing about current FSD capabilities. Yellow journalism at its finest.

- By the time this investigation concludes, we’ll be 3 versions ahead. NHTSA investigating 2-year-old software like it’s current. Completely useless.

This logic renders every Tesla crash investigation meaningless by the dumbest design in history. Since Tesla continuously updates software, no crash can ever be considered relevant evidence of the technology’s safety problems. It’s a perfect unfalsifiability shield.

Most critically, this approach demonstrates that the company is learning nothing from its data. Genuine learning becomes impossible when each software version is treated as a completely fresh start, with no connection to previous iterations.

While Tesla’s defenders seize on the legitimate point that new releases include bug fixes, they distort this into a narrative where each update absolves the company of responsibility for past failures.

This fundamentally misunderstands how software safety works and actively undermines the improvement process. Rather than seeing progressive enhancement, we should expect Tesla’s later versions to perform worse, not better, a prediction that appears validated by the company’s accelerating fatality rates.

Deflection Addiction

The online response follows a clear hierarchy in the Tesla playbook of deflection and deception. It should be recognized as a planned and coordinated private intelligence group attack pattern on government regulators and public sentiment:

Level 1: Blame the victim

- “Motorcycle was probably speeding”

- “Driver should have taken over”

- “Darwin Award winner”

Level 2: Dismiss prior software version as unrelated (invalidate learning process)

- “That’s yesterday’s FSD”

- “Tomorrow’s version fixes everything”

- “Misleading headline”

Level 3: Attack independent assessors

- “Government bias”

- “Media conspiracy”

- “Coordinated FUD campaign”

Level 4: Claim victimhood

- “They’re trying to kill our beliefs”

- “Legacy media hates us for disrupting”

- “Short sellers jealously spreading lies”

Notice what’s absent: any substantive discussion of why FSD beta software failed to detect and avoid a car, a pole, a person, a motorcycle… even as more people are being killed in “veered” Tesla crashes than ever.

Human Cost Gets Tossed

Fundamental to the beliefs of Peter Thiel and Elon Musk, being raised under southern African white supremacist doctrines by their pro-Nazi families hiding after Hitler was defeated, is their callous disregard for human lives in a quest for white men holding absolute power.

They couldn’t become engineers, lawyers, or bankers because of ethical boundaries, yet in the computer industry they found zero resistance to murder. Technological enthusiasm is ruthlessly manipulated by them both as a moral blind spot (Palantir and Tesla) where victims are made comfortable with a level of harm absolutely unconscionable in any other context. Each Palantir missile, each Tesla missile, represents real people whose lives have been permanently altered and not some kind of acceptable loss in service of some greater good.

Palantir has likely murdered hundreds of innocent people using extrajudicial assassinations (“self licking ISIS-cream cone”) without any transparency or accountability. Tesla similarly has hundreds of deaths in its careless wake, yet they remain insufficiently examined for predictable causes.

While Tesla fans debate software versions and media bias, the human impact disappears from the conversation. The Washington state crash left a motorcyclist dead and a family grieving. The Tesla driver will likely carry psychological trauma from the incident. These consequences don’t get undone by a software update.

- This is just another hit piece trying to slow down progress. How many lives will be saved when FSD is perfected? The media never mentions that.

This utilitarian argument – that current deaths are acceptable in service of future safety – would be remarkable coming from any other industry. Imagine if pharmaceutical companies dismissed drug deaths as necessary sacrifices for medical progress, or if airlines shrugged off crashes as the price of innovation.

Do I need to go on? As an applied-disinformation expert and historian, allow me to explain how Tesla social media techniques reveal the tip of a very large and dangerous iceberg in information warfare. The typical response above reveals several problematic patterns of thinking:

- False dilemma: framing this as either “accept current deaths” or “reject all progress,” when there are clearly middle paths involving more rigorous testing, better safety protocols, or different deployment strategies.

- Whataboutism: deflecting from the specific incident by pointing to hypothetical future benefits or comparing to other risks, rather than addressing the actual harm that occurred.

- Sunk cost reasoning: the implication that because development has progressed this far, we must continue regardless of current costs.

- Abstraction bias: treating real human deaths as statistics or necessary data points rather than acknowledging the irreversible loss of actual lives and the trauma inflicted on families and communities.

This is not random, as much of what I see being spread by Palantir and Tesla propaganda reads to me like South African apartheid military intelligence tactics of the 1970s. Palantir appears to be set up as South Africa’s Bureau for State Security (BOSS) updated to the digital age, with Silicon Valley’s “disruption” rhetoric providing the moral cover that anti-communism provided for apartheid-era operations.

Whereas BOSS required extensive human networks to track and eliminate targets, Palantir has evolved to process vast datasets to identify patterns and individuals for elimination with far fewer human operators. Tesla fits into this as a domestic terror tactic to dehumanize and even kill people in transit, which should be familiar to those who study the rise and abrupt fall of AWB terror campaigns in South Africa.

The same mentality that drove around South Africa shooting at Black people is now refined into “self-driving” cars that statistically target vulnerable road users. The technology evolves but the underlying worldview remains: certain lives are expendable.

Real-Time Regulatory Capture

The Tesla fan community has effectively created a form of regulatory capture through social media pressure. Any investigation into Tesla crashes gets immediately branded as biased, coordinated, or motivated by anti-innovation sentiment.

- The same people investigating Tesla crashes drive Ford and GM cars. Conflict of interest much? These investigations are jokes.

- NHTSA investigators probably have short positions in Tesla. Someone should check their portfolios.

This creates an environment where egregious, unsafe lies are allowed to stand for years, yet legitimate safety concerns can’t be raised for a minute without facing coordinated hordes making accusations of conspiracy or bias.

The result is that Tesla’s experimental software gets treated as beyond criticism, even when it kills people. The disinformation apparatus serves the same function as apartheid-era propaganda – creating a false parallel reality where obvious and deadly violence either didn’t happen, was justified, or was someone else’s fault.

Broken Pattern Continues

BOSS set the model for Palantir, hiding behind ironic national security claims (generating the very terrorists it sponges up massive federal funding to find). Tesla similarly inverts logic, spinning bogus intellectual property claims about a need to bury its safety data while people are being killed by its lack of transparency.

…Tesla’s motion argues that crash data collected through its advanced driver-assistance systems (ADAS) is confidential business information. The automaker contends that the release of this data, which includes detailed logs on vehicle performance before and during crashes, could reveal patterns in its Autopilot and Full Self-Driving (FSD) systems.

The patterns Tesla doesn’t want revealed are related to FSD causing deaths. There’s unfortunately a well-documented history of companies in America trying to withhold data under claims of trade secrets or proprietary information, particularly when it could expose dangerous patterns affecting public safety. The most notorious precedent, of course, is the tobacco industry, which for 50 years hid data about a product that allegedly caused over 16 million deaths in America alone.

All too often in the choice between the physical health of consumers and the financial well-being of business, concealment is chosen over disclosure, sales over safety, and money over morality. (US Judge Sarokin, Haines v Liggett Group, 1992)

Palantir and Tesla thus follow a playbook to avoid any accountability for unjust deaths using a simple loophole, weaponizing technological enthusiasm and huge corporate legal teams. Each court case iteration drives their extremist political violence apparatus to be invisible, yet embedded deeper into state security control. Tesla, despite failing at basic safety for over a decade, still pushes FSD onto customers who do not understand they’re participating in a public beta test of authoritarian white supremacist warfare where the stakes are measured in human lives rather than software bugs. In 2025 Tesla FSD was reportedly still unable to recognize a school bus and small children directly in front of it on a clear day.

Lawn darts were banned in 1988 after a single child died. They didn’t get software updates. They didn’t have online defenders explaining why each injury was actually the fault of an outdated dart version. They just got pulled from the market because toys aren’t supposed to kill people.

Tesla’s FSD is suspected of killing dozens if not hundreds of people, based on the two proven cases. The response, however, has been deflection, software updates, and social media disinformation. Elon Musk bought Twitter and rebranded it with a swastika, overtly messaging that normal ethical standards don’t apply as long as he can digitally mediate horrible societal harms he’s promoting and even causing himself.

…have you noticed a certain pattern used in Tesla marketing?

- Charge Plugs: 88

- Model Cost: 88

- Average Speed: 88

- Engine Power: 88

- Voice commands: 88

- Taxi launch date: 8/8

The pattern will continue until the regulatory response changes, or until Tesla’s online disinformation army can no longer hide the body count in their unmarked digital mass grave like it’s 1921 in Tulsa again.

Another tragic loss of life, and my condolences go to the family. Each of these FSD deaths deserves serious investigation.

While I support autonomous driving technology, a concept that since the 1950s has always been just a few years from finally arriving, we need to be honest about where Tesla FSD currently stands:

FSD has at least 2 verified fatalities since the 2020 beta release, with many more crashes under investigation. One reason the number is low has to do with interference from Tesla (hiding the data).

The technology is still in supervised beta testing, meaning human drivers must remain fully attentive and ready to intervene. That makes it impossible to compare with far more capable systems like Waymo. Tesla uses the supervision to hide failures.

Recent deployment attempts in China were suspended after just one week due to numerous traffic violations and regulatory concerns. Basically FSD is so bad, it can’t even get a license.

Rather than dismissing crashes as “old” software or attacking investigators, the Tesla community should:

1. Acknowledge each fatality is a real human loss

2. Support NHTSA investigations to understand failure modes

3. Advocate for more rigorous safety testing before wider deployment

4. Push Tesla to be more transparent about limitations and crash data

The goal should be genuinely safe autonomous driving, not stock games and pumping. If we’re serious about saving lives through this technology, we need to learn from every incident, on every version, not dismiss them to avoid inspection.

The victims of FSD deserve better than being experimented on without consent. We owe it to future road users to demand higher standards.